In this article, I am jumping into the AI discourse to discuss one of my fascinations: How do we apply human ethics in the AI era?

AI is much more than a technology journey. It’s a cultural human journey. To me, ethics isn’t optional. It’s been woven into the fabric of our society since its very dawn. From ancient philosophical traditions to modern human rights law, ethics helps us ask: How should we live together? It shapes our laws, institutions, norms, and expectations of fairness, dignity, accountability, and trust.

Over time, ethics has evolved. Debates have long centred on whether we should focus on virtuous character, or on rules and consequences. Which school of thought you lean towards is a personal choice. To date, though, most of us haven’t experienced enough of AI’s impact to factor it into this lens. AI is reshaping how we work, what we trust, and how we hold institutions accountable. Yet at its core, AI is a tool, and tools require ethical direction.

As societies become more complex, so do our ethical demands. We require transparency in government and the protection of privacy. We want a balance between innovation and risk. We need to ensure we don’t ignore marginalised voices, and at all times we must avoid doing harm. These are no longer abstractions but urgent expectations. Ethical systems must evolve to face technological, ecological and societal change.

AI’s friction with traditional ethical application

AI forces us to revisit how we apply ethics in real, dynamic systems. Some potential points of friction I’m thinking about are:

- Opacity and Explainability: Many AI systems (especially “black box” models) are hard to explain and understand for the layman. Yet traditional ethics often demand that decision-makers explain their reasoning. When algorithmic decisions affect people’s lives, the question arises: How do we hold a system accountable when its internal logic is opaque?

- Scale and Automation: AI can automate decisions at scale. A single misalignment or bias gets magnified rapidly. What might have been one human error now becomes systemic. How easy is it for ethical oversight to scale?

- Delegated Responsibility and Diffusion of Blame: When humans “outsource” to AI, who is responsible? Is it the developer, the vendor, the operator? We risk diluting human accountability, leading us into a responsibility vacuum. Who does the buck stop with?

- Bias, Fairness and Hidden Assumptions: AI systems may reflect accumulated historical biases. Left unchecked, they can unintentionally perpetuate discrimination. The ethical burden becomes one of continuous audit, feedback, correction and inclusivity. Are organisations sufficiently equipped to understand and resource this need?

- Changing Notions of Consent, Privacy and Data Agency: AI thrives on data, including personal, behavioural and contextual data. Traditional ethical frameworks respect individual consent, data minimisation (collecting only what’s necessary), anonymity, and purpose limitation (using data only for the stated reason). This conflicts with AI’s appetite for vast, diverse data. We must rethink what meaningful agency over data means. How will organisations and policy balance the tension between public and private knowledge?

- Contestability in Outcomes: When an AI system influences or determines outcomes (eligibility, benefit, permit, risk score), people must have recourse. Who is accountable for ensuring that ethical design bakes in dispute and review pathways?

AI should not ignore human ethics. It pressures us to embed new guardrails, governance, continuous oversight, human-in-the-loop mechanisms, and a culture of humility and vigilance.

Why ethics matters even more in the public sector

AI can transform how we deliver public services, but with great power comes great responsibility. While ethical AI is important everywhere, it’s especially critical in the public sector, where decisions can directly impact people’s lives, rights, and trust in government.

Here’s a quick list of key considerations for the public sector:

1. Trust and Social Licence: Citizens expect government to act in the public interest, transparently, fairly, and with accountability. The public sector holds a social licence, and misuse of AI risks eroding trust faster and deeper than in the private sector.

2. Governments hold significant power: Governments hold authority over enforcement, regulation, taxation, welfare and licensing. Decisions backed by AI can have profound consequences for rights, freedoms, benefits, and burdens. Ethical failings here are not merely reputational; they can cause real harm to our community.

3. Public Accountability, Oversight and Law: Public agencies have to answer to parliament, courts, audit bodies, and the electorate. They operate within administrative law, human rights obligations, privacy obligations, freedom of information frameworks, and public interest mandates. Ethics must align with these structural constraints. AI-generated information and AI-assisted decisions may not always take all of this into account.

4. Equity, Inclusion and Public Good: Public agencies focus on fairness and inclusion across the entire community, including vulnerable groups. The public sector’s measure of success is broad and includes public trust and equity. Success isn’t just efficiency; it’s better social outcomes. The private sector often measures success by profit or efficiency. If a focus on social outcomes is missing from a private sector AI tool or its output, misalignment has already occurred.

5. Long-term Horizons and Stewardship: Governments are our stewards of the future. They consider intergenerational equity, sustainability, institutional legacy, and societal resilience. AI systems need to be built with these and with adaptability in mind, not just short-term wins. As we start to compete in the AI race, are we unintentionally sprinting early only to fall short of the true finish line?

The Good News? Public and private sectors share many ethical values like fairness, transparency, and accountability. But in government, the context and consequences are different. That’s why it’s so important to have open conversations about how AI is developed and used, and which values are front and centre in our combined AI partnership.

Fostering ethical AI use through public-private collaboration

When government agencies partner with private vendors on AI initiatives, the goal should be to build a shared commitment to ethical outcomes. Rather than the public sector taking a solely compliance- or penalty-focused approach, and the private sector focusing too heavily on efficiency and profit, we can take a collaborative approach that supports transparency, accountability, and mutual learning.

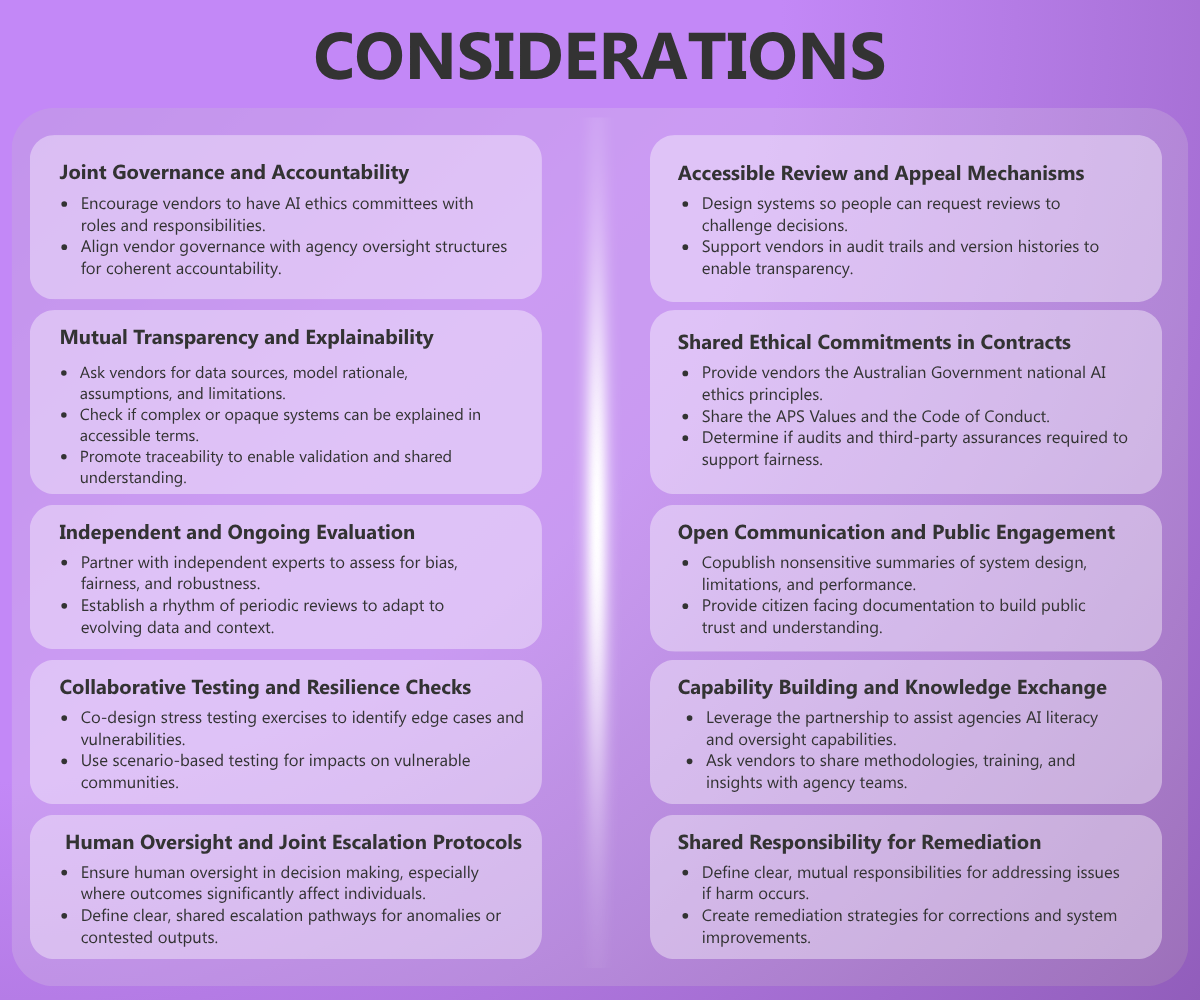

Let’s look at 10 key partnership tips:

By agreeing upfront on the core ethical values and principles that guide us, government agencies and vendors can co-create AI systems and user experiences that are not only effective but also fair, transparent, and aligned with societal outcomes. This approach helps ensure that ethics are not outsourced but shared, upheld, and continuously improved together.

To summarise:

- Ethics is foundational to civilisation, trust and civil society; it cannot be an afterthought in AI.

- AI strains traditional ethical application through opacity, scale, bias, diffusion of responsibility, contestability, and evolving notions of consent.

- The public sector’s nature, with its trust, enforcement, accountability, public good and long horizons, raises the stakes.

- Australia already has frameworks such as the Australian Government’s AI Ethics Principles and the APS Values and Code of Conduct. These must be operationalised, not merely recited. All private sector vendors should deeply understand these.

To sum up, this is a call to ethical leadership. As AI becomes ubiquitous, we need all organisations, public and private, not to outsource our moral compass. To quote my colleague Ryan Darby, one of Sensei’s resident AI gurus, “AI doesn’t have feelings, but humans do. We need to use ethics to protect ourselves and others, to take pride in what makes us human.”

So my question is, how have you considered the above tips, and how will you incorporate them into your way of working? How will you hold yourself, and others, accountable for delivering an ethical AI future?