Data security, cybersecurity and the risk of our organisation's data getting into the wrong hands are valid concerns for many people. When AI is involved, these concerns resurface. But the real issue is that AI is not the problem: people are.

Understanding of data security in relation to AI is generally poor. People don't know whether the AI solutions they are using are secure. In response, some organisations have locked down AI entirely, but this may not be the best approach. The key is using the right AI for the right situation. In this article we look at the extent of the problem, how security works in Microsoft 365 Copilot and Altus, and why they are secure. We also give you a matrix to help you work out whether the AI service you are using is secure.

We are addressing this because the question our clients ask most often when we discuss artificial intelligence is: is it secure? They ask because of a fear of cybersecurity incidents in the modern world (a justifiable fear), and because of a lack of understanding about how AI security and data protection work. We work with our clients to understand the security of their data and to reassure them.

But then this happens: the New South Wales Reconstruction Authority recently made the news when a "former contractor" uploaded personal data to ChatGPT. An investigation is ongoing, and you can read about it here.

Before we smack our foreheads and ask what was wrong with that person, let us think about how this could have happened, and honestly reflect on how many of you reading this have done, or are still doing, exactly the same thing!

A survey by the National Cybersecurity Alliance found that nearly 40% of respondents are uploading data to unsafe versions of AI. You can read about it, and the other issues common in many organisations' AI implementations, here.

You may still be wondering how this could happen. AI is there to make us more productive, so people try to do the right thing and use the tools at their disposal. Free tools are the most readily available, and ChatGPT is one of the best known. Forbes has published an opinion piece on how secure your data is if you upload it to ChatGPT (do not do this, by the way!), which you can read here.

The bottom line is that data you enter into ChatGPT and other services outside Microsoft 365 Copilot can be used to improve how those services work. Now that you are aware of that, how does it fit with your organisation's data policies? And with common sense? Are you comfortable handing client or sensitive data to another organisation? And what can you do instead?

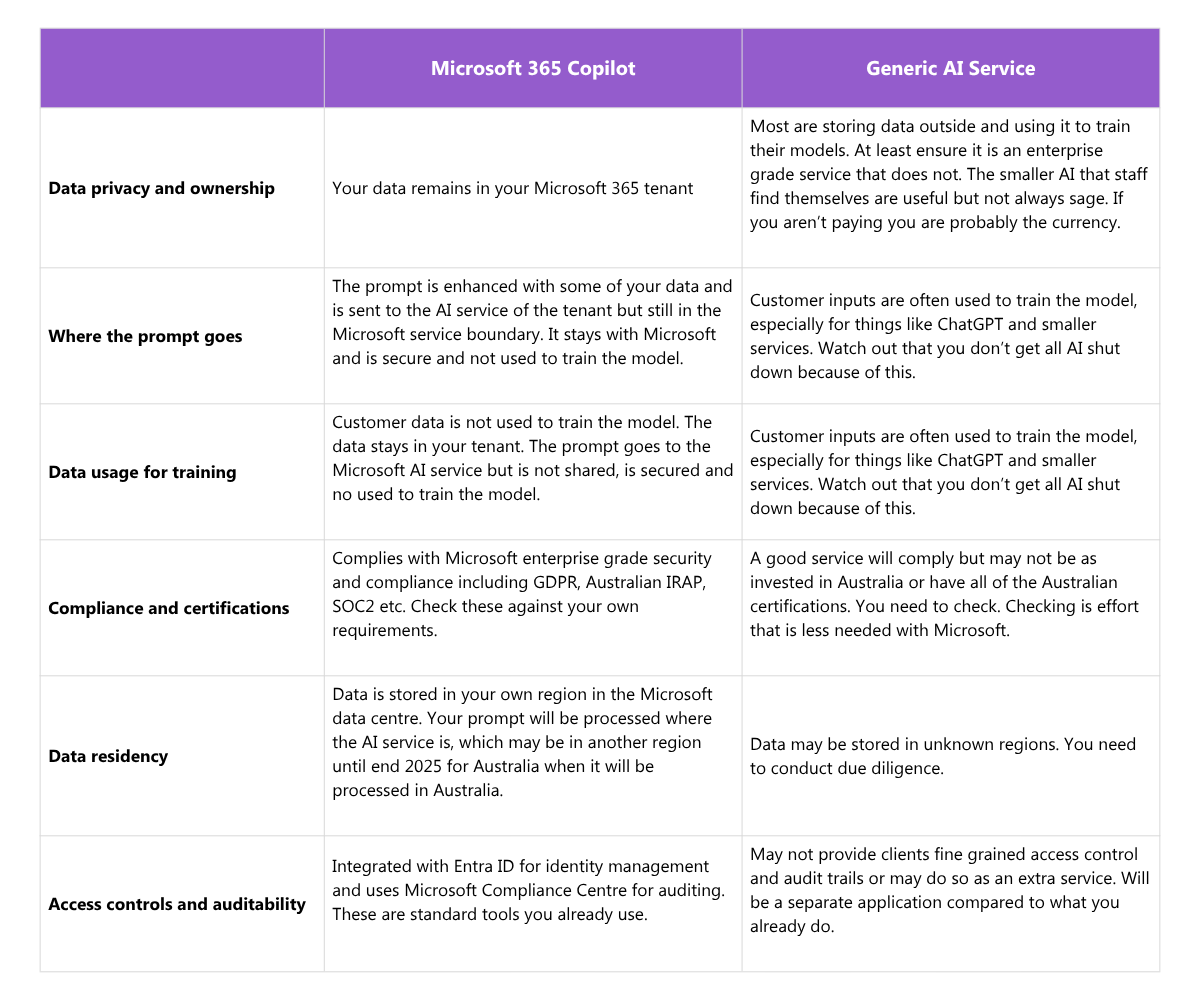

First, recognise that people are the problem, not AI. People use the wrong AI for the job. But which AI should they use instead? The obvious answer is a secure service, but how do you work out which services are secure? The table below will help you decide whether the AI service you are using is the right one, specifically comparing Microsoft 365 Copilot with other services.

Microsoft 365 Copilot is secure by design. It is purpose-built for organisational use, and organisations require their data to be secure. If you are already comfortable that your Microsoft 365 services and data are secure, Microsoft 365 Copilot operates within that same environment. You can see the details here.

Microsoft 365 Copilot protects organisational data through enterprise data protection. Copilot only surfaces data that the signed-in user already has permission to access. Data is encrypted at rest and in transit, and organisational data is not used to train the AI models. Copilot also respects existing Microsoft 365 security policies, such as Microsoft Purview sensitivity labels. It keeps each customer's data isolated from other customers and within the Microsoft 365 environment rather than sending it to public AI services.

So, if Microsoft 365 Copilot is secure, why are people using non-secure services? Largely because of how useful they perceive those services to be. In the past there was a perception, probably a valid one, that ChatGPT was more advanced than Copilot. However, Microsoft 365 Copilot now gives you the option to use GPT-5, the same underlying model that ChatGPT uses. Microsoft also includes a routing layer that decides which model is best suited to each task. Copilot has steadily improved and is worth another look, especially since it keeps your data in your tenant. With ChatGPT or another external service, you must upload your data to it. Microsoft 365 Copilot can already work with the data in your tenant, which makes it even easier to use.

If Altus and Microsoft 365 Copilot are secure, is that all you need? It isn't that simple. There will be times when another AI tool is the right choice, especially a specialised one. Some organisations lock down everything else; others are more open. Either way, you need a holistic approach that consists of:

- Training to help your staff understand how AI works and why using free tools can be risky.

- Policies to govern what is used and how.

- A review and standardisation of tools for each team. This can also introduce staff to tools that make them more productive for their type of work and help them understand the risks.

- And more education. It needs to be ongoing and incorporated into your existing security awareness training.

AI can make you much more productive, and in time it will change how we use tools such as Altus. But we do need to understand what happens to our data when we use these tools. With paid Microsoft tools and Altus, you can be assured that your data is secure.

For more information, get in touch with us today!